Strategic Approaches to Minimize Variance in RT-PCR Workflows: From Bench to Biomarker

This article provides a comprehensive guide for researchers and drug development professionals seeking to enhance the reliability and reproducibility of their Reverse Transcription Quantitative PCR (RT-qPCR) data.

Abstract

This article provides a comprehensive guide for researchers and drug development professionals seeking to enhance the reliability and reproducibility of their Reverse Transcription Quantitative PCR (RT-qPCR) data. It systematically addresses the critical pillars of a robust RT-qPCR workflow, beginning with an exploration of the fundamental sources of technical and biological variation. The content then progresses to detailed methodological protocols for sample preparation, cDNA synthesis, and qPCR setup, followed by advanced troubleshooting and optimization strategies for common pitfalls. Finally, the guide covers rigorous validation frameworks, data normalization techniques, and comparative analyses of laboratory-developed versus commercial assays, all aligned with international MIQE guidelines to ensure data integrity and translational relevance.

Understanding the Multifactorial Sources of RT-PCR Variance

Defining Technical vs. Biological Variance in Molecular Assays

In quantitative molecular assays, particularly Reverse Transcription Polymerase Chain Reaction (RT-PCR), identifying the source of variation is the first critical step towards ensuring the reliability and reproducibility of your results. Variance can be broadly categorized as either technical or biological. Technical variance arises from the experimental procedures and measurement systems themselves. In contrast, biological variance reflects the true, natural differences in target quantity between different samples or subjects within the same experimental group [1].

Understanding and minimizing technical variance is paramount because it can obscure true biological signals and lead to incorrect conclusions. This guide provides a clear framework for distinguishing between these variance types, offers troubleshooting strategies for common issues, and outlines protocols to reduce variability in your RT-PCR workflows.

Core Definitions

- Technical Variance: This is the variation inherent to the measuring system and its procedures. It can be estimated by assaying multiple aliquots of the same biological sample, known as technical replicates [1]. Sources include pipetting inaccuracy, instrument performance, reagent efficiency, and operator technique.

- Biological Variance: This is the true variation in the target quantity among different biological samples or subjects belonging to the same group (e.g., control vs. treated) [1]. This variance is accounted for by using distinct biological replicates, which are biologically independent samples that undergo the entire experimental process separately.

Quantitative Comparison of Variance Components

The table below summarizes the key characteristics and contributors of technical and biological variance.

Table 1: Key Characteristics of Technical and Biological Variance

| Feature | Technical Variance | Biological Variance |
|---|---|---|
| Definition | Variation from the experimental measurement process | Natural variation between individual biological subjects |
| Estimated Via | Technical replicates (multiple measurements of the same sample) [1] | Biological replicates (measurements from different subjects in the same group) [1] |
| Common Sources | Pipetting inaccuracy [1] [2]; instrument calibration and performance [1]; reagent quality and lot-to-lot variability [3]; operator technique [1]; amplification efficiency differences [4] | Genetic heterogeneity of subjects; physiological state (age, metabolism); environmental exposure differences; sample collection time points |
| Impact on Results | Reduces precision and can inflate or mask true biological differences [1] | Determines the true effect size and the generalizability of findings to a population [1] |

A large-scale proficiency testing survey highlights how specific technical factors contribute to result variability in viral load testing. The relative contribution of these factors can differ depending on the specific target being measured.

Table 2: Factors Contributing to Inter-Laboratory Variability in Viral Load PCR (CAP Survey Data) [3]

| Factor | Impact on Result Variability (RV) |

|---|---|

| Commercially prepared primers and probes | Made the largest contribution to overall variability |

| Amplification target gene | Prominently associated with changes in RV |

| Selection of quantitative calibrator | Associated with changes in mean viral load (MVL) and RV |

| Sample preparation method | Contributes to overall MVL and RV |

Troubleshooting Guides & FAQs

Frequently Asked Questions (FAQs)

Q1: My RT-qPCR results show high variability between technical replicates. What are the most likely causes?

High variation between technical replicates (e.g., high standard deviation or coefficient of variation among wells containing the same sample) is a classic sign of technical issues [1]. Key areas to investigate are:

- Pipetting Technique: Ensure pipettes are calibrated and used correctly with well-fitting tips. Pay special attention when pipetting viscous liquids [1].

- Reaction Setup: Visually check for consistent liquid volumes in all wells after plate loading. Centrifuge the sealed plate to consolidate samples and remove air bubbles [1].

- Instrument Performance: Verify that your thermal cycler block temperature is uniform and calibrated. Check the optical system for anomalies [1].

- Master Mix Homogeneity: Ensure all reaction components are thoroughly mixed before aliquoting.
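
As a rough illustration of the replicate check described in Q1, the sketch below computes the mean, standard deviation, and coefficient of variation for each sample's technical replicates and flags samples whose spread looks too wide. The sample names, the Cq values, and the 0.3-cycle cutoff are assumptions made for the example and do not come from the cited sources; choose a cutoff that suits your own assay.

```python
from statistics import mean, stdev

def replicate_stats(cq_values):
    """Return (mean, sample SD, %CV) for one sample's technical-replicate Cq values."""
    m = mean(cq_values)
    sd = stdev(cq_values)
    return m, sd, 100.0 * sd / m

def flag_noisy_samples(plate, max_sd=0.3):
    """Return names of samples whose replicate SD exceeds max_sd cycles.

    max_sd=0.3 is an illustrative cutoff, not a value taken from the text.
    """
    return [name for name, cqs in plate.items() if replicate_stats(cqs)[1] > max_sd]

# Hypothetical triplicate Cq values for two samples.
plate = {
    "sample_A": [24.10, 24.05, 24.18],
    "sample_B": [27.90, 28.65, 27.40],
}

noisy = flag_noisy_samples(plate)
for name, cqs in plate.items():
    m, sd, cv = replicate_stats(cqs)
    print(f"{name}: mean Cq={m:.2f}, SD={sd:.2f}, CV={cv:.2f}%")
print("Re-check pipetting and plate setup for:", noisy)
```

Because Cq is already a logarithmic quantity, the SD in cycles is usually the easier number to interpret; the CV is reported here only because the text mentions it.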

Q2: How can I determine if my RNA quality is contributing to variance in gene expression results?

Poor RNA integrity is a major source of both technical and biological misinterpretation [5].

- Assessment: Always assess RNA integrity prior to cDNA synthesis using methods like gel electrophoresis (to observe sharp ribosomal RNA bands) or microfluidic analysis (e.g., RIN > 7 for most applications) [5].

- Impact: Degraded RNA can lead to truncated cDNA, reduced amplification efficiency, and an underestimation of transcript levels, especially for longer transcripts or those with low abundance [5].

- Prevention: Minimize freeze-thaw cycles, use RNase-free reagents and techniques, and include an RNase inhibitor during reverse transcription [5].

Q3: When I see a statistically significant fold-change, how do I know if it is biologically relevant?

Statistical significance and biological relevance are distinct concepts.

- Statistical Significance: A significant p-value (e.g., < 0.05) from a t-test or ANOVA indicates that the observed fold-change is unlikely to be due to random chance (experimental variation) alone [1].

- Biological Relevance: This requires researcher judgment based on the biological context. With sufficient replicates and low variability, very small fold-changes can be statistically significant. However, in eukaryotic gene expression, a two-fold change is often considered a minimum threshold for physiological significance [1]. Always interpret your p-values in the context of the actual fold-change and the known biology of your target.

Troubleshooting Common RT-PCR Problems

Table 3: Troubleshooting Common RT-PCR Issues Related to Variance

| Problem | Possible Technical Cause | Possible Biological Cause | Solutions |
|---|---|---|---|
| Low or No Amplification [5] [6] | Poor reverse transcription efficiency; PCR inhibitors in sample; suboptimal PCR conditions (Mg²⁺, annealing temperature); inactive enzyme | Very low abundance of target transcript; degraded RNA sample | Check RNA integrity and quantity [5]; use a high-performance, inhibitor-resistant reverse transcriptase [5]; optimize PCR conditions and include a positive control [6] |
| Non-Specific Products (e.g., Primer-Dimers) [7] [6] | Poor primer design; annealing temperature too low; primer concentration too high | Presence of highly homologous gene sequences in the sample | Redesign primers with stricter criteria; use a hot-start polymerase [6]; increase annealing temperature [6]; use probe-based chemistry (e.g., TaqMan) instead of SYBR Green [7] |
| High Variability Between Replicates [1] | Inconsistent pipetting; inhomogeneous reaction mix; air bubbles in wells; uneven plate sealing | Not applicable (this is a technical issue by definition) | Practice and verify good pipetting technique [1]; vortex and centrifuge all reagents and the sealed plate [1]; run technical replicates to measure and control for this variance |

Experimental Protocols for Variance Reduction

Protocol: Designing an Experiment with Optimal Replication

Objective: To control for both technical and biological variance, allowing for statistically robust and biologically meaningful conclusions.

Methodology:

- Define Biological Replicates: These are the cornerstone of your experiment. They represent independent biological units (e.g., different animals, primary cell cultures from different donors, independently grown plants). The number of biological replicates determines your power to detect a true biological effect. A minimum of n=3 is recommended, but more may be needed for heterogeneous samples [4].

- Define Technical Replicates: These are multiple measurements (e.g., wells on a plate) of the same biological sample extract. They help account for variability in the qPCR process itself. In basic research, running triplicate technical replicates is common [1].

- Experimental Workflow: Each independent biological replicate is processed separately through RNA extraction, DNase treatment, and reverse transcription. The resulting cDNA from each biological replicate is then aliquoted into multiple technical replicates for the qPCR step.

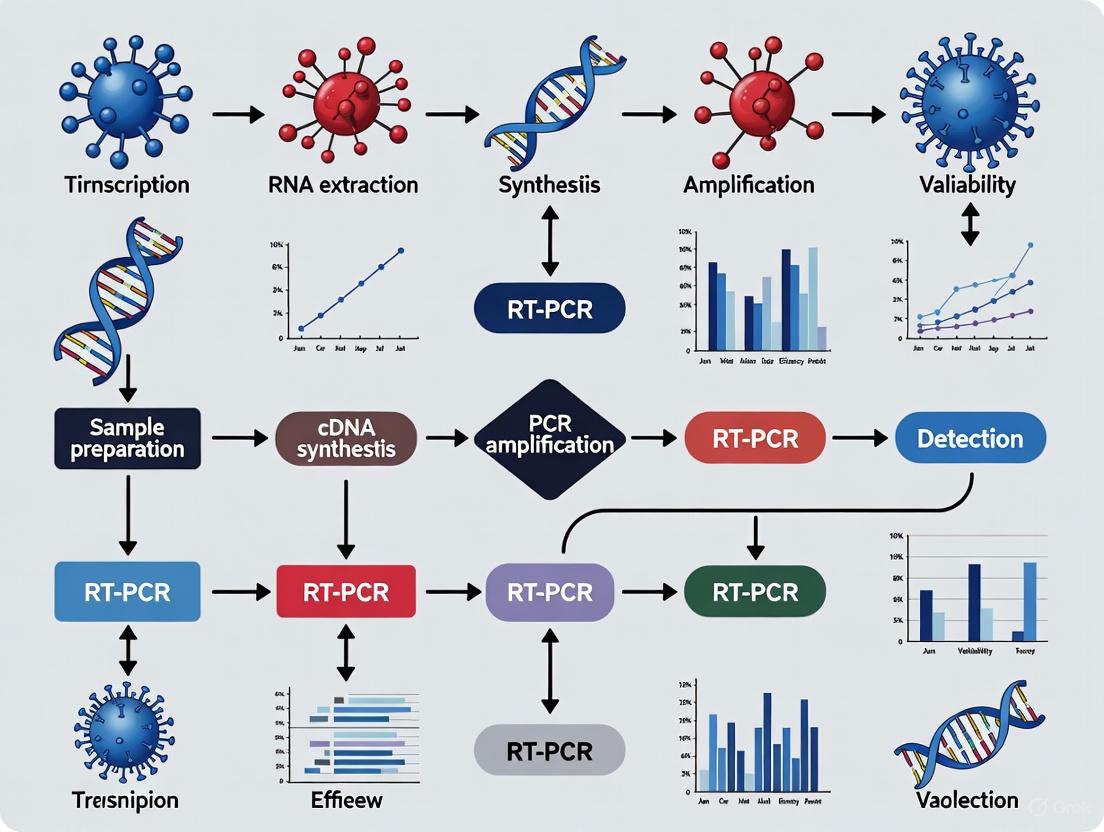

Diagram: Experimental Workflow for Robust RT-qPCR
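
The replication scheme above implies a two-level summary: technical replicates are averaged within each biological replicate first, and only the per-sample means enter the group statistics. The following is a minimal sketch of that bookkeeping; the group names, animal labels, and Cq values are invented for illustration.

```python
from statistics import mean, stdev

# Hypothetical Cq values: group -> biological replicate -> technical replicate wells.
cq_data = {
    "control": {
        "animal_1": [22.1, 22.0, 22.2],
        "animal_2": [22.6, 22.5, 22.7],
        "animal_3": [21.8, 21.9, 21.7],
    },
    "treated": {
        "animal_4": [20.9, 21.0, 21.1],
        "animal_5": [20.4, 20.5, 20.6],
        "animal_6": [21.3, 21.2, 21.4],
    },
}

def summarize_group(bio_reps):
    """Average technical replicates per sample, then summarize across biological replicates."""
    per_sample = [mean(wells) for wells in bio_reps.values()]
    return {
        "n_biological": len(per_sample),
        "mean_cq": mean(per_sample),
        "sd_biological": stdev(per_sample),
    }

summary = {group: summarize_group(reps) for group, reps in cq_data.items()}
for group, stats in summary.items():
    print(group, stats)
```

Treating the nine wells of a group as nine independent observations would overstate the sample size; here n stays at the number of biological replicates.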

Protocol: Statistical Analysis of qPCR Data for Variance Assessment

Objective: To correctly analyze qPCR data, compare treatment groups, and account for sources of variation.

Methodology: [4]

- Data Transformation: qPCR data (Normalized Relative Quantities or NRQs) are not normally distributed. Apply a log transformation (base 2 or 10) to the NRQ data to create a new variable, often called Cq'. This transformation stabilizes the variance and makes the data suitable for parametric statistical tests.

Cq' = -log₂(NRQ) [4]

- Statistical Testing: Use Analysis of Variance (ANOVA) to compare the Cq' values across your biological groups. ANOVA allows you to assess the variation due to your treatment factors (main effects) and can account for block effects like inter-plate variation [4].

- Interpretation: A significant result (p < 0.05) indicates that the observed differences in gene expression between groups are unlikely to be due to random biological and technical variation alone, and are likely caused by the experimental treatment [1].
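
To make the transformation and test concrete, here is a small sketch that applies Cq' = -log₂(NRQ) and computes a one-way ANOVA F statistic by hand. The NRQ values are invented, the function names are hypothetical, and the sketch covers only a single treatment factor: it does not model block effects such as inter-plate variation, and it stops at the F statistic (a statistics package is needed to turn F and its degrees of freedom into a p-value).

```python
import math
from statistics import mean

def cq_prime(nrq):
    """Log-transform a normalized relative quantity: Cq' = -log2(NRQ)."""
    return -math.log2(nrq)

def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of groups of values."""
    all_values = [v for g in groups for v in g]
    grand_mean = mean(all_values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical NRQs, one value per biological replicate.
control_nrq = [1.0, 1.2, 0.9]
treated_nrq = [2.1, 2.6, 1.9]

groups = [[cq_prime(x) for x in control_nrq], [cq_prime(x) for x in treated_nrq]]
f_stat, df_b, df_w = one_way_anova_f(groups)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```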

The Scientist's Toolkit: Key Reagent Solutions

Table 4: Essential Research Reagents for Variance Reduction

| Reagent / Material | Function | Impact on Variance |

|---|---|---|

| High-Performance Reverse Transcriptase | Synthesizes cDNA from RNA templates. | Reduces technical variance from inefficient or inhibited cDNA synthesis, especially for long transcripts or low-abundance targets [5]. |

| Hot-Start DNA Polymerase | Prevents non-specific amplification during PCR setup. | Reduces technical variance from primer-dimer formation and non-specific products, improving assay specificity and precision [6]. |

| Passive Reference Dye | Normalizes for non-PCR-related fluorescence fluctuations. | Corrects for variations in reaction volume and optical anomalies, improving inter-well precision [1]. |

| DNase I (RNase-free) | Removes contaminating genomic DNA from RNA samples. | Prevents false positives and overestimation of transcript levels, a source of technical bias [5]. |

| Standardized Calibrators / Reference Materials | Provides a known quantity of target for generating standard curves. | Reduces inter-laboratory and inter-assay variability by providing a common benchmark for quantification [3]. |

| Multiplex qPCR Assays | Allows simultaneous amplification of multiple targets in a single well. | When a reference gene is included in the multiplex, normalizing target data from the same well provides a precision correction, reducing technical variance [1]. |

The reliability of any experimental result, particularly in molecular diagnostics and research, is heavily dependent on the steps taken before the actual analysis begins. The pre-analytical phase encompasses all processes from sample collection to the point of analysis. Evidence indicates that up to 75% of all laboratory errors originate in this phase, making it the most significant contributor to overall variability and a critical focus for quality improvement [8]. For techniques like RT-PCR, which are central to gene expression analysis, viral load testing, and diagnostic assays, controlling pre-analytical variables is paramount for obtaining accurate, reproducible, and clinically or scientifically valid data.

This section details the critical pre-analytical variables that affect RT-PCR workflows. It is structured as a technical support resource, with troubleshooting guides and FAQs to help researchers, scientists, and drug development professionals identify and mitigate specific issues encountered during experiments.

Sample Collection & Handling: Troubleshooting Guide

Errors introduced during sample collection and handling are often irreversible and can compromise all subsequent steps.

Frequently Asked Questions

Q1: Why is my serum potassium level falsely elevated in a non-hemolyzed specimen?

A: Pseudohyperkalemia can be caused by patient activity during phlebotomy. Repeated fist clenching with a tourniquet applied can cause a 1-2 mmol/L increase in potassium due to potassium efflux from forearm muscle cells. In one documented case, this led to a serum potassium reading of 6.9 mmol/L in an outpatient setting, while levels taken via an in-dwelling catheter in a hospital were normal (3.9-4.5 mmol/L) [9].

Solution: Instruct patients to avoid fist clenching. Release the tourniquet within one minute of application.

Q2: Why are my coagulation test results (e.g., PT, aPTT) artificially prolonged?

A: A common cause is an improper blood-to-anticoagulant ratio. This occurs with underfilled blood collection tubes or in patients with a high hematocrit (>0.55, i.e., >55%). In both cases there is too much citrate relative to the plasma volume, so the calcium added during testing only partially recalcifies the sample and clotting times are prolonged [10].

Solution: Ensure tubes are filled to the correct volume. For patients with elevated hematocrit, use the following formula to adjust the volume of 3.2% trisodium citrate:

C (mL) = (1.85 × 10⁻³) × (100 − Hct (%)) × V (mL)

Where C is the volume of citrate and V is the volume of whole blood in the tube [10].
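
The citrate adjustment is simple arithmetic, and a small helper makes it harder to mistype. This sketch implements the formula exactly as given above; the function name and the example hematocrit and blood volume are illustrative choices.

```python
def citrate_volume_ml(hematocrit_percent, blood_volume_ml):
    """Volume of 3.2% trisodium citrate (mL): C = 1.85e-3 * (100 - Hct%) * V."""
    if not 0 < hematocrit_percent < 100:
        raise ValueError("hematocrit must be given as a percentage between 0 and 100")
    if blood_volume_ml <= 0:
        raise ValueError("blood volume must be positive")
    return 1.85e-3 * (100 - hematocrit_percent) * blood_volume_ml

# Example: a patient with a hematocrit of 60% and a 4.5 mL blood draw.
adjusted = citrate_volume_ml(60, 4.5)
print(f"Citrate volume: {adjusted:.3f} mL")
```

For comparison, the same formula at a hematocrit of 45% and 4.5 mL of blood gives roughly 0.46 mL, so the adjustment for a 60% hematocrit removes about a quarter of the citrate.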

Q3: My hematology results (e.g., hemoglobin, WBC) are erratic and inconsistent when run from the same tube. What could be wrong?

A: This may be due to improper mixing. If a sample tube is overfilled, the air bubble necessary for proper mixing on a rocking mixer cannot move effectively. This leads to inadequate resuspension of cells and erroneous results [9].

Solution: Always collect samples to the designated fill volume. If a tube is overfilled, remove a small volume of blood to create an air bubble before mixing.

Table 1: Optimal Blood Sample Volumes for Different Laboratory Tests [9]

| Test Category | Sample Type | Recommended Volume |

|---|---|---|

| Clinical Chemistry (20 analytes) | Heparinized Plasma | 3 - 4 mL whole blood |

| Clinical Chemistry (20 analytes) | Serum | 4 - 5 mL clotted blood |

| Hematology | EDTA Blood | 2 - 3 mL whole blood |

| Coagulation Tests | Citrated Blood | 2 - 3 mL whole blood |

| Immunoassays | EDTA Blood | 1 mL whole blood (for 3-4 assays) |

| Erythrocyte Sedimentation Rate | Citrated Blood | 2 - 3 mL whole blood |

| Blood Gases (Capillary) | Arterial Blood | 50 µL |

| Blood Gases (Venous) | Heparinized Blood | 1 mL |

Patient & Sample Specific Variables

Biological and physiological factors introduce variability that must be recognized and controlled where possible.

Frequently Asked Questions

Q4: Can a patient's diet really affect laboratory results?

A: Yes, profoundly. Food ingestion is a significant source of pre-analytical variability. For example, glucose and triglycerides increase after meals. A specific case involved a 75-year-old woman who presented with hypernatremia (serum sodium of 162 mmol/L) and confusion after consuming several bowls of soup, which caused a massive sodium intake [9].

Solution: Implement and communicate strict patient preparation protocols, including overnight fasting (10-14 hours) for specific tests and dietary restrictions where necessary [8].

Q5: What physiological factors outside of disease can influence my results?

A: Multiple factors can cause variation, including:

- Posture: Shifting from lying to standing can increase serum concentrations of proteins by ~9% within 10 minutes.

- Circadian Rhythm: Analytes like iron, potassium, cortisol, and renin exhibit significant diurnal variation.

- Exercise: Strenuous exercise can increase muscle-derived enzymes like creatine kinase and aldolase.

- Pregnancy and Gender: Pregnancy can reduce Protein S levels, and females generally have different baseline levels of some clotting factors compared to males [10] [8].

Solution: Standardize the time of sample collection, document patient posture and recent activity, and use gender- or condition-specific reference ranges where appropriate.

RNA Integrity & PCR-Specific Variables

For RT-PCR and related molecular techniques, the quality of the nucleic acid template is a fundamental pre-analytical factor.

Frequently Asked Questions

Q6: What is the biggest source of variability in qPCR or RNA-Seq workflows?

A: A poll of researchers identified Reverse Transcription (cDNA synthesis) and Amplification as the top sources of variability. These steps are known to introduce errors and amplification bias. Bioinformatics/Data Analysis and Normalization were also highly rated as significant contributors [11].

Q7: My PCR has low yield or no product. What should I check in my template DNA/RNA?

A: The integrity and purity of your nucleic acid template are critical [12].

Solution:

- Poor Integrity: Minimize shearing during isolation. Assess integrity by gel electrophoresis. Store DNA in molecular-grade water or TE buffer (pH 8.0).

- Low Purity: Ensure no residual PCR inhibitors (phenol, EDTA, proteinase K, salts) are present. Re-purify or ethanol-precipitate the template. Use polymerases with high inhibitor tolerance.

- Insufficient Quantity: Increase the amount of input template or the number of PCR cycles. Use a more sensitive DNA polymerase.

- Complex Targets (GC-rich): Use a PCR additive (e.g., DMSO, GC Enhancer), increase denaturation temperature/time, and choose a polymerase with high processivity [12].

Q8: How can I systematically optimize my qPCR assay for maximum accuracy?

A: An optimized, stepwise protocol is essential for achieving high efficiency and specificity [13].

Experimental Protocol: Stepwise qPCR Optimization [13]

- Primer Design: For plants and species with homologous genes, design primers based on Single-Nucleotide Polymorphisms (SNPs) to ensure specificity. Avoid relying solely on computational tools without validation.

- Annealing Temperature Optimization: Use a gradient thermal cycler to test a range of temperatures (e.g., 1–2°C increments) around the predicted Tm.

- Primer Concentration Optimization: Test primer concentrations in the range of 0.1–1 μM to find the concentration that minimizes primer-dimer formation and maximizes signal.

- cDNA Concentration Curve: Run a standard curve with a serial dilution (e.g., 1:10, 1:100, 1:1000) of your cDNA to determine the optimal dynamic range for each primer pair.

- Validation Criteria: The optimal primer pair for each gene should yield a standard curve with R² ≥ 0.999 and an amplification efficiency (E) of 100 ± 5%. This is a prerequisite for reliable use of the 2^(−ΔΔCt) method for analysis [13].
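
The validation criteria in the last step can be checked directly from a dilution series. The sketch below fits Cq against log₁₀ of the relative template amount by ordinary least squares and derives the efficiency from the slope using the standard relation E = 10^(−1/slope) − 1. The Cq values are invented, and the function name is hypothetical.

```python
def fit_standard_curve(log10_amounts, cq_values):
    """Least-squares fit of Cq vs log10(template amount).

    Returns (slope, intercept, r_squared, efficiency_percent).
    """
    n = len(log10_amounts)
    mean_x = sum(log10_amounts) / n
    mean_y = sum(cq_values) / n
    sxx = sum((x - mean_x) ** 2 for x in log10_amounts)
    syy = sum((y - mean_y) ** 2 for y in cq_values)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(log10_amounts, cq_values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = sxy ** 2 / (sxx * syy)
    efficiency_percent = (10 ** (-1.0 / slope) - 1.0) * 100.0
    return slope, intercept, r_squared, efficiency_percent

# Hypothetical 10-fold cDNA dilution series: undiluted, 1:10, 1:100, 1:1000.
log10_amounts = [0, -1, -2, -3]
cq_values = [18.00, 21.32, 24.64, 27.96]

slope, intercept, r2, eff = fit_standard_curve(log10_amounts, cq_values)
passes = r2 >= 0.999 and 95.0 <= eff <= 105.0
print(f"slope={slope:.2f}, R^2={r2:.4f}, efficiency={eff:.1f}%, passes={passes}")
```

A slope near −3.32 corresponds to a doubling of product every cycle (about 100% efficiency); shallower or steeper slopes move the efficiency above or below that value.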

Q9: How can I assess RNA integrity in complex samples like wastewater?

A: RNA integrity is not uniform across the genome. A Long-Range Reverse Transcription digital PCR (LR-RT-dPCR) method can be used.

Experimental Protocol: Assessing RNA Integrity with LR-RT-dPCR [14]

- Long-Range RT: Perform reverse transcription using a single specific primer at the 3' end to generate a long, contiguous cDNA strand.

- Sample Partitioning: Partition the cDNA sample into many individual reactions via digital PCR.

- Multiplex Amplification: Perform a multiplex PCR amplification on targets located at the 3' end, middle, and 5' end of the genome within the partitioned samples.

- Analysis: The detection frequency of the different fragments indicates the integrity of the RNA. More degraded RNA will show lower detection frequencies for fragments further from the 3' end. This method has been successfully applied to assess the integrity of SARS-CoV-2 RNA in wastewater [14].
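
One way to picture the analysis step is to express each target's detection frequency relative to the 3' end target. The sketch below does only that. The partition counts and position labels are invented, and the calculation is a simplified illustration rather than the published analysis: it uses the raw fraction of positive partitions and applies no Poisson correction.

```python
def integrity_profile(positive_partitions, total_partitions, reference="3_prime"):
    """Detection frequency of each target relative to the 3' end target.

    positive_partitions: dict mapping target position -> number of positive partitions.
    A value near 1.0 means the region is detected as often as the 3' end.
    """
    frequency = {pos: n / total_partitions for pos, n in positive_partitions.items()}
    ref_frequency = frequency[reference]
    if ref_frequency == 0:
        raise ValueError("reference target was not detected")
    return {pos: f / ref_frequency for pos, f in frequency.items()}

# Hypothetical counts from one multiplex dPCR run with 20,000 partitions.
counts = {"3_prime": 4000, "middle": 2000, "5_prime": 1000}
profile = integrity_profile(counts, total_partitions=20000)
for position, ratio in profile.items():
    print(f"{position}: {ratio:.2f}")
```

In this made-up example the ratio falls with distance from the 3' primer, which is the pattern the protocol associates with more degraded RNA.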

Diagram: Pre-Analytical Phase Workflow

The following diagram maps the key stages and decision points in the pre-analytical phase, highlighting where critical errors can occur.

The Scientist's Toolkit: Research Reagent Solutions

Selecting the right reagents is critical for minimizing variability. The following table details key solutions for robust RT-PCR.

Table 2: Key Research Reagent Solutions for RT-PCR Workflows

| Reagent / Material | Function / Purpose | Key Considerations for Variance Reduction |

|---|---|---|

| Hot-Start DNA Polymerase | Enzyme for PCR amplification that is inactive at room temperature. | Prevents non-specific amplification and primer-dimer formation during reaction setup, enhancing specificity and yield [12]. |

| Sequence-Specific Primers | Oligonucleotides designed to bind specifically to the target sequence. | Design must be based on aligned homologous sequences and SNPs to ensure target specificity and avoid off-target binding [13]. |

| PCR Additives (e.g., DMSO, GC Enhancer) | Co-solvents that help denature complex DNA secondary structures. | Critical for amplifying GC-rich targets or sequences with stable secondary structures; requires concentration optimization [12]. |

| dNTP Mix | The four nucleotide building blocks (dATP, dCTP, dGTP, dTTP) for DNA synthesis. | Must be provided in equimolar concentrations to prevent misincorporation errors and ensure high fidelity amplification [12]. |

| Magnesium Salt (MgCl₂/MgSO₄) | Essential cofactor for DNA polymerase activity. | Concentration must be optimized for each primer-template system; excess Mg²⁺ can reduce fidelity and increase non-specific binding [12]. |

| RNA Stabilization Reagents | Chemicals that immediately inhibit RNases upon sample collection. | Preserves RNA integrity from the moment of collection, critical for accurate gene expression analysis [14]. |

| Standardized Quantitative Calibrators | Reference materials with known analyte concentrations. | Allows for calibration across different labs and platforms, significantly reducing inter-laboratory variability in quantitative assays like viral load testing [3]. |

The Impact of Reagent Sourcing and Enzyme Selection on Assay Reproducibility

Troubleshooting Guide: Addressing Common Reproducibility Issues

Why do my RT-qPCR results show poor reproducibility between experiments, even when using the same sample?

Poor reproducibility in RT-qPCR often stems from the reverse transcription (RT) step, which introduces significant, often overlooked, quantitative biases.

- Primary Cause: Enzyme- and gene-specific biases in reverse transcription. Different reverse transcriptase enzymes exhibit varying efficiencies when reverse transcribing different RNA targets, leading to inconsistent cDNA yields [15]. One study demonstrated that a 2-fold increase in RNA input into an RT reaction resulted in an average Cq decrease of only 0.39, substantially lower than the theoretical decrease of 1.0, indicating significant non-linearity [15].

- Solution:

  - Validate your RT enzyme: Perform dilution series with your specific RNA targets and chosen RT enzyme to assess linearity [15].

  - Use a consistent priming strategy: Reactions primed by target-specific primers are linear over a wider range than those primed by random primers [16]. Consistently use the same priming strategy (oligo-dT, random hexamers, or gene-specific) across all experiments [16].

  - Include RT controls: Run RT reactions at least in duplicate, starting from the RNA template, to account for variability inherent to the RT step [16].
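
The linearity check can be reduced to a single number: the change in Cq per doubling of RNA input, which is −1.0 for a perfectly linear RT step at 100% PCR efficiency. The sketch below estimates it as the least-squares slope of Cq against log₂(input). The function name is hypothetical, and the Cq values are invented to reproduce the 0.39-cycle shift reported in [15].

```python
import math

def cq_shift_per_doubling(rna_inputs_ng, cq_values):
    """Least-squares slope of Cq vs log2(RNA input); the ideal value is -1.0."""
    xs = [math.log2(v) for v in rna_inputs_ng]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(cq_values) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, cq_values))
    return sxy / sxx

# 2-fold RNA dilution series with made-up Cq values showing a compressed response.
rna_inputs_ng = [75, 150, 300, 600]
observed_cq = [25.00, 24.61, 24.22, 23.83]

shift = cq_shift_per_doubling(rna_inputs_ng, observed_cq)
print(f"Cq change per doubling of input: {shift:.2f} (ideal: -1.00)")
```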

Why do I get different gene expression results when I use different commercial reverse transcription kits?

Different RT kits contain distinct reverse transcriptase enzymes and optimized buffer compositions, which can dramatically alter the efficiency of cDNA synthesis for specific targets.

- Primary Cause: Reverse transcriptase-specific bias. Research shows that commercial RT kits can produce opposing results for the same sample [15]. For example, when comparing two kits (iScript and Transcriptor), one kit showed a compressed Cq increase for 5.8S rRNA but not U1 snRNA, while the other kit showed the reverse pattern [15]. This can lead to an apparent differential expression of over 5-fold between dilutions of the same RNA depending on the kit used [15].

- Solution:

  - Kit Consistency: Once a project is initiated, use the same RT kit and lot number throughout the study to minimize inter-experiment variability.

  - Report Kit Details: Adhere to MIQE guidelines by thoroughly documenting the RT kit, enzyme, and protocol used in your publications [15].

  - Empirical Validation: Do not assume kit performance; validate your specific targets with the chosen kit.

How does RNA integrity affect my assay reproducibility, and how can I mitigate this?

Compromised RNA integrity is a major source of variability, but its effect is not uniform across all targets, leading to skewed results.

- Primary Cause: Amplicon-specific bias from degraded RNA. Partly degraded RNA samples affect different targets unevenly. In one experiment, most amplicons showed expected Cq value increases with degraded template, but the U1 snRNA amplicon showed a decrease, likely due to its structured nature and higher resistance to degradation [15]. Normalizing a target gene to a reference gene that responds differently to degradation can create a false differential expression of ~2-fold between intact and fragmented samples of the same RNA [15].

- Solution:

  - Quality Control: Rigorously assess RNA Integrity Numbers (RIN) using automated electrophoresis systems and set a minimum acceptable threshold for your experiments.

  - Assess Target Stability: Evaluate how your genes of interest and proposed reference genes behave under different RNA integrity conditions [16] [15].

  - Use Multiple Reference Genes: Employ a panel of empirically validated reference genes to dampen technical and biological spurious readings, rather than relying on a single gene [15].
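
Normalizing against a panel of reference genes can be sketched as follows. Averaging the reference Cq values is equivalent to taking the geometric mean of the reference quantities when every assay amplifies at about 100% efficiency, which is an assumption of this example. The Cq values and function names are invented for illustration.

```python
from statistics import mean

def delta_cq(target_cq, reference_cqs):
    """Target Cq minus the mean Cq of the reference gene panel."""
    return target_cq - mean(reference_cqs)

def fold_change(treated, control):
    """Relative expression (treated vs control) as 2^(-ddCq).

    Each argument is a dict with a 'target' Cq and a list of 'references' Cq values.
    Assumes ~100% amplification efficiency for every assay.
    """
    ddcq = (delta_cq(treated["target"], treated["references"])
            - delta_cq(control["target"], control["references"]))
    return 2 ** (-ddcq)

# Hypothetical Cq values for one target gene and three reference genes.
control = {"target": 25.0, "references": [18.0, 20.0, 22.0]}
treated = {"target": 24.0, "references": [18.1, 19.9, 22.0]}

fc = fold_change(treated, control)
print(f"Fold change (treated vs control): {fc:.2f}")
```

Small opposite shifts in individual reference genes (here +0.1 and −0.1 cycles) cancel in the panel mean, which is the dampening effect the recommendation relies on.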

What causes non-specific amplification and high background in my PCR, and how can I improve specificity?

Non-specific products and primer-dimers often result from suboptimal reaction conditions and enzyme activity at low temperatures.

- Primary Cause: Premature enzyme activity and suboptimal cycling conditions. DNA polymerases can exhibit activity at room temperature, leading to non-specific priming and primer-dimer formation before the PCR begins [12] [17].

- Solution:

  - Use Hot-Start Polymerases: Employ hot-start DNA polymerases that remain inactive until a high-temperature activation step, dramatically improving specificity [12] [17] [18].

  - Optimize Annealing Temperature: Use a gradient thermal cycler to determine the optimal annealing temperature for each primer set [12] [17]. Increase the annealing temperature stepwise in 1-2°C increments if non-specific amplification occurs [12].

  - Optimize Mg²⁺ Concentration: Adjust Mg²⁺ concentration in 0.2-1 mM increments, as excessive concentrations can promote non-specific binding [12] [17].

  - Use PCR Additives: For GC-rich templates, use additives like DMSO (1-10%), glycerol, or formamide (1.25-10%) to help denature secondary structures and improve specificity [18].

Frequently Asked Questions (FAQs)

Q: How critical is master mix selection for assay reproducibility?

Master mix selection is fundamental for reproducible results. Real-time PCR master mixes are pre-mixed solutions containing essential components like buffers, enzymes, and dNTPs [19]. However, different formulations are optimized for specific applications (e.g., gene expression, genotyping, pathogen detection) [19]. Using a master mix not validated for your specific application can introduce variability. For maximum reproducibility, choose a master mix designed for your application and use the same product and lot number across all experiments in a study.

Q: What are the key factors to consider when selecting a DNA polymerase for high-fidelity applications?

For applications like cloning, sequencing, or mutagenesis, polymerase fidelity is critical. Key considerations include:

- Proofreading Activity: DNA polymerases with 3'→5' exonuclease activity (e.g., Pfu, Q5) have significantly higher fidelity and lower error rates than those without (e.g., Taq) [17] [18].

- Error Rate: Taq polymerase has an error rate of 2×10⁻⁴ to 2×10⁻⁵ errors/base/doubling, while high-fidelity archaeal polymerases have much lower error rates [18].

- Reaction Conditions: Excess Mg²⁺ concentration and unbalanced dNTP concentrations can increase error rates, even with high-fidelity enzymes [12].

Q: How can automation improve the reproducibility of my PCR workflows?

Automation significantly enhances reproducibility by:

- Reducing Human Error: Automated liquid handling systems ensure precise, consistent reagent dispensing across all wells and plates [20].

- Eliminating Contamination: Minimizes manual pipetting steps that can introduce cross-contamination.

- Standardizing Protocols: Automated systems execute identical protocols repeatedly, removing technician-to-technician variability [20].

- Improving Data Analysis: Automated analysis platforms can record cycle thresholds, flag abnormal amplification curves, and generate standardized reports, reducing interpretation biases [20].

Q: What is the impact of temperature control on enzyme assay reproducibility?

Temperature stability is paramount for reproducible enzyme kinetics. A change of just 1°C can lead to a 4-8% variation in enzyme activity [21]. For PCR and enzyme assays, ensure your thermal cycler or incubator is properly calibrated and maintains stable, uniform temperatures across all samples. For microplate-based assays, be aware of "edge effects", where liquid in the outer (perimeter) wells evaporates faster than in central wells, causing temperature and concentration inconsistencies [21].

Table 1: Impact of Technical Variables on Assay Reproducibility

| Variable | Impact on Reproducibility | Quantitative Effect | Recommended Optimization |

|---|---|---|---|

| Reverse Transcription | High variability in cDNA synthesis efficiency | 2-fold RNA input increase → Cq decrease of only 0.39 (theoretical=1.0) [15] | Use consistent priming strategy; include RT duplicates [16] |

| RNA Integrity | Degradation affects targets differentially | Cq increase of 2.00 for eEF1A1 vs 0.68 decrease for U1 in degraded RNA [15] | Set minimum RIN threshold; validate reference gene stability [15] |

| Temperature Control | Direct impact on enzyme activity | 1°C change → 4-8% variation in enzyme activity [21] | Use calibrated equipment; avoid microplate edge effects [21] |

| DNA Polymerase Fidelity | Affects error rate in amplified products | Taq error rate: 2×10⁻⁴ to 2×10⁻⁵ errors/base/doubling [18] | Use high-fidelity enzymes with proofreading for cloning/sequencing [17] |

| Annealing Temperature | Critical for amplification specificity | Suboptimal temperature causes nonspecific products and primer-dimers [12] | Optimize using gradient PCR in 1-2°C increments [12] |

Table 2: Reproducibility Challenges in Biomedical Research

| Reproducibility Issue | Prevalence | Impact |

|---|---|---|

| General Irreproducibility | 50-89% of published biomedical research [16] | Wasted research funding; slowed medical advances [16] |

| Inadequate Reporting | 40% of in vivo studies lack complete animal characteristics [16] | Inability to replicate or validate experimental conditions |

| Resource Identification | 54% of resources in publications cannot be adequately identified [16] | Impossible to source identical reagents for replication |

| RT-qPCR Bias | Apparent false differential expression >5-fold with different RT kits [15] | Erroneous conclusions about gene expression changes |

Experimental Protocols

Protocol 1: Systematic Evaluation of Reverse Transcription Linearity

Purpose: To quantify the linearity and efficiency of your reverse transcription step for specific gene targets, identifying potential biases before main experiments [15].

Materials:

- High-quality total RNA sample

- Selected reverse transcription kit(s)

- Real-time PCR system and reagents

- Primers for your genes of interest and reference genes

Procedure:

- Prepare a 2-fold dilution series of your RNA sample (e.g., 75 ng, 150 ng, 300 ng, 600 ng) in nuclease-free water.

- For each RNA concentration, perform reverse transcription according to your kit's protocol. Include a no-RT control for each concentration to detect genomic DNA contamination.

- Prepare a 2-fold dilution series of each resulting cDNA (e.g., undiluted, 1:2, 1:4).

- Run qPCR for all your targets across all cDNA dilutions and RNA input concentrations.

- Data Analysis: Calculate the Cq change for each 2-fold dilution. The theoretical value is 1.0. Values significantly lower indicate compression and non-linearity in your RT step for that specific target [15].
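
The data-analysis step above can be sketched in Python as a least-squares fit of Cq against log2 of RNA input. This is an illustrative sketch only: the function name and all Cq values are hypothetical, and a real analysis would use replicate measurements for each input.

```python
import math

def cq_shift_per_doubling(rna_inputs_ng, cq_values):
    """Return the Cq decrease per 2-fold increase in RNA input.

    Fits Cq against log2(input) by ordinary least squares and flips the
    sign of the slope, so a perfectly linear RT step returns 1.0.
    """
    x = [math.log2(v) for v in rna_inputs_ng]
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(cq_values) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, cq_values))
    return -sxy / sxx

# Hypothetical example data (not taken from the cited study)
inputs_ng = [75, 150, 300, 600]
ideal_cq = [28.0, 27.0, 26.0, 25.0]        # 1.0 Cq per doubling
compressed_cq = [26.2, 25.8, 25.4, 25.0]   # compressed response

ideal_shift = cq_shift_per_doubling(inputs_ng, ideal_cq)
compressed_shift = cq_shift_per_doubling(inputs_ng, compressed_cq)
```

A shift well below 1.0 for a given target, as in the hypothetical compressed series, is the kind of RT non-linearity this protocol is meant to expose.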

Protocol 2: Design of Experiments (DoE) for Rapid Enzyme Assay Optimization

Purpose: To efficiently identify optimal assay conditions and factor interactions in less time than traditional one-factor-at-a-time approaches [22].

Materials:

- Purified enzyme of interest

- Substrates and buffers

- Plate reader or discrete analyzer capable of kinetic measurements

Procedure:

- Define Factors and Ranges: Identify critical factors (e.g., pH, ionic strength, Mg²⁺ concentration, temperature, enzyme concentration) and their test ranges based on literature and preliminary experiments.

- Fractional Factorial Design: Use statistical software to create an experimental design that screens multiple factors simultaneously to identify the most significant ones.

- Response Surface Methodology: After identifying key factors, design a second set of experiments to model the response surface and locate the optimum conditions.

- Validation: Confirm the predicted optimum with experimental replicates.

This approach, exemplified with human rhinovirus-3C protease optimization, can identify optimal conditions in less than 3 days, compared with over 12 weeks using traditional methods [22].
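
To make the screening step concrete, the sketch below builds a two-level half-fraction design for the five example factors named in the procedure. It is a hand-rolled illustration, not the method of the cited study: the factor labels, the coded levels (-1 = low, +1 = high), and the choice of generator (last factor = product of the others) are assumptions, and dedicated statistical software would normally be used instead.

```python
from itertools import product

# Factor names taken from the procedure's examples; labels are illustrative.
FACTORS = ["pH", "ionic_strength", "Mg2+", "temperature", "enzyme_conc"]

def half_fraction_design(factors):
    """Build a 2^(k-1) fractional factorial design in coded units.

    The first k-1 factors form a full two-level factorial; the level of
    the last factor is set to the product of the others (the generator).
    """
    *base, last = factors
    runs = []
    for levels in product((-1, 1), repeat=len(base)):
        generator = 1
        for level in levels:
            generator *= level
        run = dict(zip(base, levels))
        run[last] = generator
        runs.append(run)
    return runs

design = half_fraction_design(FACTORS)  # 16 runs instead of 32
```

With this generator, each main effect is confounded only with a four-factor interaction, which is usually an acceptable trade-off for an initial screen. The coded levels still have to be mapped onto real ranges (for example, a low and a high pH) before the runs are executed.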

Workflow Visualization

Assay Reproducibility Workflow

This workflow outlines the systematic approach to achieving reproducible results in RT-PCR assays, highlighting critical decision points at each stage where reagent sourcing and enzyme selection significantly impact reproducibility.

Research Reagent Solutions

Table 3: Essential Reagents for Reproducible RT-PCR

| Reagent Category | Function | Key Selection Criteria | Reproducibility Impact |

|---|---|---|---|

| Reverse Transcriptase | Converts RNA to cDNA for amplification | Enzyme source, processivity through secondary structures, priming preference (oligo-dT/random/gene-specific) | High: Different enzymes show gene-specific biases; kit choice can cause >5-fold expression differences [15] |

| DNA Polymerase | Amplifies DNA template | Fidelity (error rate), thermostability, hot-start capability, processivity (bases incorporated/second) | High: Affects specificity, yield, and accuracy of amplified products; critical for downstream applications [12] [18] |

| Master Mix | Provides optimized reaction environment | Buffer composition, Mg²⁺ concentration, stabilizers, inclusion of additives | Medium-High: Pre-mixed solutions reduce pipetting variability but must be matched to application [19] |

| dNTPs | Building blocks for DNA synthesis | Purity, concentration balance, stability | Medium: Unbalanced concentrations increase PCR error rates; degradation affects yield [12] |

| Mg²⁺ Solution | Cofactor for polymerase activity | Concentration, salt type (Cl⁻ vs SO₄²⁻) | High: Concentration affects enzyme activity, specificity, and fidelity; optimal range is narrow [12] [18] |

| PCR Additives | Modify nucleic acid properties | Type (DMSO, formamide, BSA, betaine), concentration | Medium: Can improve specificity and yield for difficult templates but require optimization [18] |

FAQ: How Thermocycler Performance Introduces Variability

1. How does the thermal cycler's block uniformity affect my qPCR results? The precision with which a thermal cycler maintains temperature uniformity across all wells in its block is a critical source of variability. Even minor inconsistencies can lead to differences in amplification efficiency between samples. This is because the annealing and denaturation steps are highly temperature-sensitive. Non-uniform heating can cause some reactions to proceed less efficiently or not at all, skewing quantification cycles (Cqs) and compromising the accuracy of your quantitative data [23].

2. Can the thermocycler's ramp rate impact my assay? Yes, the speed at which the instrument transitions between temperatures (ramp rate) can influence reaction specificity and efficiency. While faster ramp rates can reduce overall run time, they may not provide sufficient time for complete primer annealing or enzyme binding in some assays, potentially leading to reduced yield or the formation of non-specific products. It is essential to validate that your specific PCR protocol is compatible with the instrument's ramp rate capabilities [24].

3. Why is the optical detection system of a real-time PCR thermocycler important? In real-time qPCR, the optical system is responsible for accurately measuring fluorescence signals during each cycle. Variability in the sensitivity, calibration, or uniformity of the excitation and detection optics across the block can lead to significant differences in recorded fluorescence. This optical variability directly impacts the determination of the Cq value, a cornerstone of quantitative analysis, and can affect the dynamic range and limit of detection of your assay [23].

4. How can I minimize variability introduced by the thermocycler? To minimize instrument-derived variability, adhere to the following practices:

- Regular Calibration and Maintenance: Follow the manufacturer's schedule for thermal and optical calibration to ensure the instrument performs to specification.

- Use a Single Instrument per Study: Where possible, run all plates for a study on the same instrument. If a multi-instrument study is unavoidable, use the same make and model with identical protocols, and validate consistency across the instruments beforehand.

- Include Proper Controls: Always run a standard curve and positive controls on every plate to monitor inter-assay performance and efficiency [25] [26].

- Position Replicates Carefully: When setting up technical replicates, distribute them across different locations on the block to average out any spatial heterogeneity in temperature or optics.

Troubleshooting Guide: Thermocycler-Related Issues

| Problem | Potential Thermocycler-Related Cause | Recommended Solution |

|---|---|---|

| High inter-assay variation (results not reproducible between runs) | Temperature calibration drift over time; inconsistent block uniformity. | Perform instrument calibration and maintenance as recommended by the manufacturer. Use a multi-position thermometer to verify block uniformity [12]. |

| High variation between replicates on the same plate | Poor spatial uniformity of temperature across the block; inconsistencies in optical scanning. | Distribute replicate samples across different well positions. Contact technical support for optical and thermal performance verification [23]. |

| Low amplification efficiency | Suboptimal or fluctuating temperatures during critical steps (e.g., annealing, extension). | Verify and calibrate the instrument. Ensure the programmed protocol (times, temperatures) matches the validated method for your assay [12]. |

| Non-specific amplification or primer-dimers | Inaccurate temperature control during low-stringency steps like annealing. | Verify the actual block temperature during the annealing step. Use a thermal gradient function to empirically determine the optimal annealing temperature for your primer set [27]. |

| Inconsistent standard curve data | Combined effect of thermal and optical performance variability, affecting Cq determination. | Include a standard curve in every run instead of relying on a historical "master curve" to account for run-to-run instrumental variance [25]. |

Quantitative Data on Inter-Assay Variability

The following data, derived from a study that evaluated RT-qPCR standard curves for virus detection across 30 independent standard curve experiments, highlight the inherent variability between assays, which can be influenced by instrument performance.

Table 1: Efficiency and Variability of Viral Assays [25]

| Viral Target | Mean Efficiency (%) | Inter-assay CV for Efficiency | Key Findings |

|---|---|---|---|

| SARS-CoV-2 (N2 gene) | 90.97% | 4.38% - 4.99% | Showed the largest variability and lowest efficiency among the targets tested. |

| Norovirus GII (NoVGII) | >90% | Highest variability | Demonstrated better sensitivity but also the highest inter-assay variability in efficiency. |

| All other viruses | >90% | Variability observed | Adequate efficiency but demonstrated consistent inter-assay variability independent of viral concentration. |

Conclusion from the data: The observed heterogeneity in key parameters like efficiency underscores the necessity of including a standard curve in every experiment to obtain reliable and reproducible quantitative results, thereby controlling for instrumental and reagent variability [25].
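
For readers who want to track this metric in their own laboratory, the inter-assay coefficient of variation (CV) for efficiency can be computed as sketched below. The efficiency values are hypothetical placeholders, not data from the cited study, and the function name is illustrative.

```python
import statistics

def percent_cv(values):
    """Coefficient of variation in percent: sample SD divided by the mean."""
    return statistics.stdev(values) / statistics.mean(values) * 100

# Hypothetical amplification efficiencies (%) from independent runs
run_efficiencies = [92.1, 88.4, 95.0, 90.3, 89.7]
inter_assay_cv = percent_cv(run_efficiencies)
```

Logging this value over time gives an early warning when reagent lots or instrument performance begin to drift.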

Experimental Protocol: Verifying Thermocycler Block Uniformity

Objective: To empirically assess the temperature consistency across the thermocycler block, a major factor in reaction variability.

Materials:

- Thermocycler to be tested

- Multi-position temperature verification system (e.g., a thermal probe array or calibrated thermocouples)

- A standard PCR tube plate

Methodology:

- Setup: Place the temperature verification sensors in multiple wells across the block, following a pattern that covers corners, edges, and the center. Use a plate filled with water or a standard reaction mix to simulate realistic thermal mass.

- Programming: Program the thermocycler to run a representative protocol. This should include:

- A prolonged denaturation step (e.g., 95°C for 2 minutes).

- A high number of cycles (e.g., 30-35) with a short denaturation (e.g., 95°C for 15 sec), a typical annealing temperature (e.g., 60°C for 30 sec), and an extension (e.g., 72°C for 30 sec).

- A final hold at 4°C.

- Data Collection: Start the protocol and record the temperature measured by each sensor at each stable setpoint (denaturation, annealing, extension).

- Analysis: Calculate the mean temperature and the range (maximum minus minimum) for each setpoint across all measured positions.

Interpretation: A well-performing block should show a very narrow temperature spread (e.g., within ±0.5°C) across all positions at each setpoint. A larger spread indicates poor uniformity, which can be a source of well-to-well variability in your PCR results. These data should be used for preventative maintenance and to understand the performance limits of the instrument.
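
The analysis and interpretation steps can be sketched as follows. The sensor readings are hypothetical, the function names are illustrative, and the ±0.5°C tolerance is read here as the maximum deviation from the setpoint, which is an assumption; substitute the specification from your instrument's manufacturer.

```python
import statistics

def summarize_block(readings_by_setpoint):
    """Summarize sensor readings (°C) for each programmed setpoint (°C)."""
    summary = {}
    for setpoint, temps in readings_by_setpoint.items():
        summary[setpoint] = {
            "mean": statistics.mean(temps),
            "range": max(temps) - min(temps),  # maximum minus minimum
            "max_deviation": max(abs(t - setpoint) for t in temps),
        }
    return summary

def is_uniform(summary, tolerance_c=0.5):
    """True if every position stays within the tolerance at every setpoint."""
    return all(s["max_deviation"] <= tolerance_c for s in summary.values())

# Hypothetical readings from five positions (corners and center)
readings = {
    95.0: [94.8, 95.1, 95.0, 94.9, 95.2],
    60.0: [59.7, 60.1, 60.0, 60.2, 59.9],
    72.0: [71.8, 72.0, 72.3, 72.1, 71.9],
}
block_summary = summarize_block(readings)
block_ok = is_uniform(block_summary)
```

Keeping the per-setpoint summaries from each verification run makes gradual calibration drift easier to spot than a single pass/fail result.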

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for RT-qPCR Workflow Variance Reduction

| Item | Function in Variance Reduction |

|---|---|

| Quantitative Synthetic RNA Standards | Provides an absolute reference for generating standard curves in every run, controlling for inter-assay variability in efficiency [25]. |

| TaqMan Fast Virus 1-Step Master Mix | Pre-mixed, optimized reagents reduce pipetting steps and handling errors. "Fast" formulations can shorten protocols, potentially reducing cumulative temperature-related variability [25]. |

| Validated Primer & Probe Sets | Assays with well-characterized performance and minimal primer-dimer formation enhance specificity and consistency, reducing noise in the data [27]. |

| Instrument Calibration Kits | Specialized tools for verifying the thermal and optical performance of the thermocycler, ensuring it operates within specified tolerances. |

| Nuclease-Free Water | A pure, uncontaminated water source is critical for preventing degradation of RNA templates and reaction components, a major source of failed reactions. |

| High-Quality RNA Isolation Kits | Consistent and pure RNA extraction is the first step to reliable reverse transcription. Kits with built-in genomic DNA removal steps add another layer of specificity [28]. |

The Role of MIQE Guidelines in Standardizing Experimental Reporting

Quantitative real-time PCR (qPCR) and its reverse transcription variant (RT-qPCR) represent gold standard techniques in molecular biology for detecting and quantifying nucleic acids. However, the reliability of thousands of peer-reviewed publications has been compromised by inadequate reporting of experimental details and the use of flawed protocols. The Minimum Information for Publication of Quantitative Real-Time PCR Experiments (MIQE) guidelines were established in 2009 to address these critical issues by providing a standardized framework for conducting, documenting, and reporting qPCR experiments. By promoting experimental transparency and ensuring consistency between laboratories, MIQE compliance helps maintain the integrity of the scientific literature and is particularly crucial for reducing workflow variances in RT-PCR experiments.

FAQs: Understanding MIQE Guidelines

What are the MIQE guidelines and why were they created?

The MIQE (Minimum Information for Publication of Quantitative Real-Time PCR Experiments) guidelines are a set of standards devised to improve the quality and transparency of quantitative real-time PCR experiments. They were created in response to a growing concern about the technical adequacy of qPCR data published in scientific literature, which was often insufficiently documented, making it impossible to reproduce results or evaluate their validity. The guidelines cover all aspects of qPCR experiments, from experimental design and sample preparation to data analysis and reporting. Following these guidelines ensures that experiments are well-documented and that results can be independently verified by other scientists, which is essential for advancing scientific knowledge.

What are the most critical MIQE requirements for RT-qPCR experiments?

For reverse transcription quantitative PCR (RT-qPCR) experiments, several MIQE requirements are particularly critical for ensuring data reliability. These include detailed documentation of sample processing and storage conditions, accurate assessment of RNA integrity and quality, demonstration of the absence of inhibitors, comprehensive description of reverse transcription reaction conditions, provision of primer and probe sequences, validation of amplification efficiency using calibration curves, and proper normalization using validated reference genes. The guidelines also emphasize the importance of including appropriate controls such as no template controls and no amplification controls with every experiment.

How do MIQE guidelines address the problem of normalization in qPCR?

Normalization is an essential component of reliable qPCR data analysis, and the MIQE guidelines provide specific recommendations for this critical step. The guidelines emphasize that mRNA data should be normalized using reference genes that must be experimentally validated for particular tissues or cell types under specific experimental conditions. Unless fully validated single reference genes are used, normalization should be performed against multiple reference genes chosen from a sufficient number of candidate reference genes tested from independent pathways. The use of fewer than three reference genes is generally not advisable, and reasons for choosing fewer must be specifically addressed. For large-scale miRNA expression profiling experiments, normalization should be performed against the mean expression value of all expressed miRNAs.
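
As a rough illustration of normalization against multiple reference genes, the sketch below divides the target quantity by the geometric mean of the reference-gene quantities. The function names and Cq values are hypothetical, and the default of perfect doubling per cycle (amplification factor 2.0) is an assumption; measured efficiencies should be substituted where available.

```python
import math

def geometric_mean(values):
    """Geometric mean of positive numbers, computed in log space."""
    return math.exp(sum(math.log(v) for v in values) / len(values))

def normalized_expression(target_cq, reference_cqs, amplification_factor=2.0):
    """Target quantity relative to the geometric mean of reference quantities.

    Quantities are derived as amplification_factor ** (-Cq); 2.0 assumes
    100% efficiency for every assay.
    """
    target_qty = amplification_factor ** (-target_cq)
    reference_qtys = [amplification_factor ** (-cq) for cq in reference_cqs]
    return target_qty / geometric_mean(reference_qtys)

# Hypothetical Cq values for one sample: one target, three reference genes
sample_value = normalized_expression(25.0, [20.0, 22.0, 24.0])
```

The geometric mean is used rather than the arithmetic mean because it is less sensitive to a single reference gene with much higher abundance than the others.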

What technical validation does MIQE require for qPCR assays?

MIQE requires comprehensive technical validation of qPCR assays to ensure their specificity, efficiency, and sensitivity. Essential validation parameters include demonstration of primer specificity through in silico analysis and empirical methods, determination of PCR efficiency using calibration curves with reported slope, y-intercept, and correlation coefficients, establishment of the linear dynamic range, and determination of the limit of detection. The guidelines also require reporting the method for Cq determination, outlier identification and treatment, and results for no template controls. For assays using intercalating dyes like SYBR Green I, confirmation of amplification specificity through melt curve analysis is essential.

Troubleshooting Guides

Normalization Strategy Selection

Table 1: Comparison of Normalization Strategies for qPCR Experiments

| Normalization Method | Principle | Advantages | Limitations | Recommended Use Cases |

|---|---|---|---|---|

| Single Reference Gene | Uses one constitutively expressed gene for calibration | Simple, requires minimal resources | Prone to error if reference gene expression varies; unreliable without validation | Only when a single gene has been rigorously validated for specific conditions |

| Multiple Reference Genes | Uses the geometric mean of several stable genes | More robust than single gene; reduces bias | Requires identification and validation of multiple suitable genes | Most gene expression studies; recommended default approach |

| Total RNA Measurement | Normalizes to total RNA quantity input | Does not require stable reference genes | Assumes constant cellular transcriptome; does not account for RNA quality variations | Initial calibration; not recommended as sole method for final analysis |

| Quantile Normalization | Assumes identical expression distribution across samples | Data-driven; does not require pre-selected genes | Requires large gene sets; may obscure biological variations | High-throughput qPCR with hundreds of targets |

| Rank-Invariant Normalization | Uses genes with stable rank order across samples | Data-driven; adapts to experimental conditions | Requires sufficient sample numbers for stable gene selection | Experiments with multiple samples and sufficient reference targets |

Problem: Inconsistent results between replicates and experiments. Solution: Implement comprehensive assay validation as required by MIQE guidelines. Develop calibration curves from serial dilutions to determine amplification efficiency (target 90-110%), linearity (R² > 0.99), linear dynamic range, and limit of detection. Include inter-run calibrators when samples cannot be analyzed in the same run to correct for run-to-run variations.

Problem: Unstable normalization with traditional housekeeping genes. Solution: Systematically validate multiple candidate reference genes (minimum of 3) for your specific experimental conditions using algorithms such as GeNorm or NormFinder. Avoid using commonly employed reference genes like GAPDH or ACTB without experimental validation, as their expression can vary significantly across different tissues and experimental conditions.

Problem: High variability in standard curves between experiments. Solution: Include a standard curve in every qPCR run rather than relying on historical curves or master curves. Recent research demonstrates that although standard curves may show adequate efficiency (>90%), significant inter-assay variability still occurs, which can substantially impact quantitative accuracy, particularly in applications requiring precise quantification such as wastewater-based epidemiology or viral load monitoring.

Sample Quality and Integrity Issues

Problem: Degraded RNA samples affecting quantification accuracy. Solution: Implement rigorous RNA quality assessment using methods such as microfluidics-based systems or 3':5'-type assays. Report RNA Integrity Numbers (RIN/RQI) for all samples and avoid quantitatively comparing RNAs with widely dissimilar quality (e.g., RIN values of 4.5 versus 9.5). Use specialized extraction protocols for specific RNA types such as miRNAs, as extraction efficiency is reagent-dependent.

Problem: Presence of inhibitors in nucleic acid preparations. Solution: Test each sample or representative samples for the absence of inhibitors using either an "alien" spike or a dilution series of target genes. The extent of residual genomic DNA contamination must be reported, ideally for each sample, by comparing quantification cycles obtained with and without reverse transcription for each nucleic acid target.

Data Analysis and Interpretation Challenges

Problem: High variability in fluorescence thresholds and Cq determination. Solution: Establish consistent threshold setting methods across all experiments. While many qPCR instruments provide automated threshold settings, these may require manual adjustment to ensure consistency across runs. Document and report the method used for Cq determination, as this represents a significant source of inter-assay variability.

Problem: Inadequate experimental design leading to confounding technical variation. Solution: Implement a sample maximization strategy (running as many samples as possible in the same run) rather than a gene maximization strategy (analyzing multiple genes in the same run) to minimize technical, run-to-run variation between different samples when comparing gene expression levels.

Experimental Protocols for MIQE Compliance

Reference Gene Validation Protocol

- Select Candidate Genes: Choose 3-8 candidate reference genes from different functional pathways to reduce the chance of co-regulation.

- Design Sequence-Specific Primers: For plant genomes with homologous genes, design primers based on single-nucleotide polymorphisms present in all homologous sequences to ensure specificity.

- Establish Optimal PCR Conditions: Optimize primer sequences, annealing temperatures, primer concentrations, and cDNA concentration range for each reference gene.

- Generate Standard Curves: Create serial dilutions of cDNA to generate standard curves for each primer pair. The optimal standard curve should achieve R² ≥ 0.9999 and amplification efficiency (E) = 100 ± 5%.

- Evaluate Expression Stability: Use algorithms such as GeNorm, NormFinder, or BestKeeper to evaluate the expression stability of candidate reference genes under specific experimental conditions.

- Determine Optimal Number of Reference Genes: Calculate the pairwise variation (V) between sequential normalization factors to determine the optimal number of reference genes required for reliable normalization.
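
The last two steps can be sketched with a simplified, geNorm-style calculation. This is not the GeNorm software itself: the full algorithm ranks genes by stepwise exclusion of the least stable candidate, whereas this sketch ranks them in a single pass. The gene labels and relative quantities are hypothetical.

```python
import math
import statistics

def stability_m(quantities):
    """Per-gene stability M: the mean, over all other genes, of the SD of
    the log2 expression ratio across samples (lower = more stable)."""
    m_values = {}
    for gene_j, qty_j in quantities.items():
        sds = []
        for gene_k, qty_k in quantities.items():
            if gene_k == gene_j:
                continue
            log_ratios = [math.log2(a / b) for a, b in zip(qty_j, qty_k)]
            sds.append(statistics.stdev(log_ratios))
        m_values[gene_j] = statistics.mean(sds)
    return m_values

def normalization_factors(quantities, genes):
    """Per-sample geometric mean of the selected genes' quantities."""
    n_samples = len(quantities[genes[0]])
    return [
        math.exp(sum(math.log(quantities[g][s]) for g in genes) / len(genes))
        for s in range(n_samples)
    ]

def pairwise_variation(quantities, ranked_genes, n):
    """V(n/n+1): SD of log2(NF_n / NF_n+1) across samples."""
    nf_n = normalization_factors(quantities, ranked_genes[:n])
    nf_next = normalization_factors(quantities, ranked_genes[:n + 1])
    return statistics.stdev([math.log2(a / b) for a, b in zip(nf_n, nf_next)])

# Hypothetical relative quantities: four candidate genes, five samples
quantities = {
    "REF_A": [1.00, 1.10, 0.95, 1.05, 0.98],
    "REF_B": [2.00, 2.15, 1.92, 2.08, 2.01],
    "REF_C": [0.50, 0.58, 0.47, 0.55, 0.49],
    "REF_D": [1.00, 2.40, 0.60, 1.90, 0.80],
}
m_values = stability_m(quantities)
ranked = sorted(m_values, key=m_values.get)  # most stable first
v_2_3 = pairwise_variation(quantities, ranked, 2)
```

A small V(n/n+1) suggests that adding the next gene changes the normalization factor very little; a cut-off of about 0.15 is often quoted for geNorm, but it should be treated as a guide rather than a strict rule.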

qPCR Assay Validation Protocol

Primer Specificity Validation:

- Perform in silico specificity analysis using BLAST or similar tools.

- Validate empirically using gel electrophoresis, melt curve analysis, or sequencing of amplification products.

- Confirm absence of primer-dimer formation in no template controls.

Efficiency Determination:

- Prepare a 5-10 point serial dilution series (at least 5 orders of magnitude) of template cDNA.

- Run each dilution in duplicate or triplicate across the same plate.

- Plot Cq values against log template concentration and calculate efficiency from the slope: Efficiency = (10^(-1/slope) - 1) × 100%.

- Acceptable efficiency ranges from 90-110% with R² > 0.99.
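
The efficiency calculation above can be sketched as a plain least-squares fit. The function names and the dilution-series values are hypothetical; the efficiency formula and the acceptance limits are the ones stated in this protocol.

```python
def fit_standard_curve(log10_quantity, cq):
    """Fit Cq = slope * log10(quantity) + intercept by least squares.

    Returns (slope, intercept, r_squared, efficiency_percent), where
    efficiency_percent = (10 ** (-1 / slope) - 1) * 100.
    """
    n = len(cq)
    mean_x = sum(log10_quantity) / n
    mean_y = sum(cq) / n
    sxx = sum((x - mean_x) ** 2 for x in log10_quantity)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(log10_quantity, cq))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((y - (slope * x + intercept)) ** 2
                 for x, y in zip(log10_quantity, cq))
    ss_tot = sum((y - mean_y) ** 2 for y in cq)
    r_squared = 1 - ss_res / ss_tot
    efficiency_percent = (10 ** (-1 / slope) - 1) * 100
    return slope, intercept, r_squared, efficiency_percent

def passes_acceptance(efficiency_percent, r_squared):
    """Apply the 90-110% efficiency and R² > 0.99 criteria."""
    return 90 <= efficiency_percent <= 110 and r_squared > 0.99

# Hypothetical 10-fold dilution series spanning six orders of magnitude
log10_copies = [6, 5, 4, 3, 2, 1]
mean_cq = [15.1, 18.5, 21.8, 25.2, 28.6, 31.9]
slope, intercept, r_squared, efficiency = fit_standard_curve(log10_copies, mean_cq)
curve_ok = passes_acceptance(efficiency, r_squared)
```

A slope near -3.32 corresponds to 100% efficiency, so the slope itself is a quick sanity check before the percentage is reported.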

Dynamic Range and Sensitivity Assessment:

- Determine the linear dynamic range where amplification efficiency remains constant.

- Establish the limit of detection (LOD) as the lowest concentration at which at least 95% of replicates of a positive sample are detected.

- Determine the limit of quantification (LOQ) based on replicate analysis in a standard curve.
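
One simple way to apply the 95% detection criterion to replicate data is sketched below. The function name and replicate counts are hypothetical, and the rule of stopping at the first concentration that fails the criterion is an assumption made to avoid reporting an LOD below a concentration that already fails.

```python
def limit_of_detection(hits, min_rate=0.95):
    """Return the lowest concentration meeting the detection-rate criterion.

    hits maps concentration -> (replicates detected, replicates tested).
    Concentrations are scanned from highest to lowest and the scan stops
    at the first level that falls below min_rate. Returns None if even
    the highest concentration fails.
    """
    lod = None
    for concentration in sorted(hits, reverse=True):
        detected, tested = hits[concentration]
        if detected / tested < min_rate:
            break
        lod = concentration
    return lod

# Hypothetical replicate results (copies per reaction -> detected, tested)
replicate_hits = {
    1000: (20, 20),
    100: (20, 20),
    10: (19, 20),
    1: (12, 20),
}
lod = limit_of_detection(replicate_hits)
```

Formal LOD estimates are often derived from probit or logistic models fitted to the same hit-rate data; the direct rule above is only a first approximation.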

Essential Research Reagent Solutions

Table 2: Key Reagents and Materials for MIQE-Compliant qPCR Experiments

| Reagent/Material | Function | MIQE Compliance Considerations |

|---|---|---|

| Nucleic Acid Extraction Kits | Isolation of high-quality RNA/DNA | Document complete protocol including any modifications; report quality metrics (RIN, A260/280 ratios) |

| Reverse Transcriptase | cDNA synthesis from RNA templates | Report manufacturer, concentration, reaction conditions, priming method (oligo-dT, random hexamers, or gene-specific) |

| qPCR Master Mix | Provides components for amplification | Report manufacturer, formulation, concentration of components (Mg²⁺, dNTPs); specify whether contains ROX reference dye |

| Sequence-Specific Primers | Target amplification | Report sequences, concentrations, manufacturer, purification method; validate specificity |

| Hydrolysis Probes | Specific detection of amplified targets | Report sequences, modifications, dye identities, and quenchers; concentration in final reaction |

| Validated Reference Genes | Normalization of sample-to-sample variation | Report identity, validation data for specific experimental conditions; use multiple validated genes |

| Quantification Standards | Standard curve generation for absolute quantification | Document source, preparation method, stability testing; use for efficiency determination |

Workflow Diagrams for MIQE Implementation

[Diagram not reproduced: MIQE-Compliant qPCR Workflow]

[Diagram not reproduced: Reference Gene Selection and Validation Process]

The implementation of MIQE guidelines represents a critical step toward enhancing the reliability and reproducibility of qPCR-based research. By providing a comprehensive framework for experimental design, execution, and reporting, these guidelines address the principal sources of variance in RT-PCR workflows. The troubleshooting guides, standardized protocols, and analytical frameworks presented in this technical support center provide researchers with practical tools to overcome common challenges in qPCR experiments. As the field continues to evolve with emerging technologies such as digital PCR, the principles embodied in the MIQE guidelines remain essential for maintaining scientific rigor and ensuring that research findings are technically sound, reproducible, and meaningful to the scientific community.

Implementing a Standardized and Robust RT-qPCR Workflow

Within the context of reducing variance in the RT-PCR workflow, the initial RNA extraction step is arguably the most critical. The purity, integrity, and quantity of the isolated RNA directly influence the accuracy and reproducibility of all subsequent data. This guide addresses common challenges and provides targeted troubleshooting strategies to ensure that your RNA extraction process yields reliable, high-quality material, thereby minimizing experimental variance at its source.

Troubleshooting Guide: Common RNA Extraction Issues

FAQ: How can I prevent RNA degradation during extraction? RNA is highly susceptible to degradation by RNases, which are ubiquitous and stable enzymes [29].

- Solution:

- RNase Decontamination: Decontaminate your workspace, pipettes, and equipment before starting. Use a specialized RNase decontamination solution, wiping down surfaces and then following with dH₂O or 70% ethanol [29].

- Proper PPE: Always wear clean gloves and a lab coat. Change gloves frequently, especially after touching potentially contaminated surfaces, and never touch your face or hair with gloved hands [29].

- Inhibit Endogenous RNases: Homogenize samples immediately upon collection in a chaotropic lysis buffer (e.g., containing guanidinium isothiocyanate) or flash-freeze them in liquid nitrogen. For tissues, stabilization solutions like RNAlater can be used to protect RNA before homogenization [30].

FAQ: My RNA has low purity, as indicated by abnormal A260/A280 and A260/A230 ratios. What does this mean and how can I fix it? Spectrophotometric absorbance ratios are key indicators of RNA purity and can reveal specific contaminants [31].

- Solution: The table below interprets common ratio abnormalities and their solutions.

| Purity Ratio | Ideal Value | Low Value Indicates | Troubleshooting Action |

|---|---|---|---|

| A260/A280 | ~2.0 (for RNA) [31] | Protein or phenol contamination [29] [31] | Ensure proper phase separation during phenol-chloroform extraction; avoid aspirating the interphase or organic layer [32]. |

| A260/A230 | 2.1 - 2.3 [31] | Contaminants like chaotropic salts (e.g., guanidinium), EDTA, or carbohydrates [31] | Perform additional wash steps with ethanol-based buffers during column purification; ensure complete removal of the wash buffer before elution [30]. |
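
The interpretation in the table can be turned into a simple automated check, sketched below. The function name and the default cut-offs (1.8 and 2.0) are assumptions chosen for illustration; they are not values given in this guide and should be set according to your own acceptance criteria.

```python
def purity_flags(a260, a280, a230, min_260_280=1.8, min_260_230=2.0):
    """Return warnings for low absorbance ratios.

    The default cut-offs are illustrative assumptions, not values taken
    from this guide.
    """
    flags = []
    if a260 / a280 < min_260_280:
        flags.append("Low A260/A280: possible protein or phenol contamination")
    if a260 / a230 < min_260_230:
        flags.append("Low A260/A230: possible chaotropic salt, EDTA, "
                     "or carbohydrate carry-over")
    return flags

# Hypothetical absorbance readings for one sample
sample_flags = purity_flags(a260=1.00, a280=0.62, a230=0.45)
```

An empty list means neither ratio fell below its cut-off; it does not confirm that the RNA is intact, which requires a separate integrity check.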

FAQ: How do I know if my RNA is intact and not fragmented? While purity is important, it does not guarantee the RNA is intact. Integrity refers to the RNA being largely unfragmented [31].

- Solution:

- Agarose Gel Electrophoresis: Visualize the RNA. Intact total RNA should show sharp, clear ribosomal RNA bands (28S and 18S in mammalian RNA), with the 28S band approximately twice the intensity of the 18S band.

- Automated Electrophoresis Systems: For a more quantitative measure, use systems like the Agilent Bioanalyzer, which provides an RNA Integrity Number (RIN). A RIN of 7 or higher is generally recommended for sensitive downstream applications like RT-qPCR [30].

FAQ: My RNA yield is lower than expected. What are the potential causes? Several factors during sample handling and processing can lead to poor RNA recovery.

- Solution:

- Incomplete Homogenization: Ensure tissues are thoroughly ground to a fine powder under liquid nitrogen and homogenized completely in lysis buffer. For fibrous tissues, use a more vigorous disruption method [33].

- Overloaded Column: Do not exceed the binding capacity of the silica membrane in column-based kits [30].

- Incomplete Elution: When using column-based kits, ensure the elution buffer is applied to the center of the membrane and let it incubate for 1-2 minutes before centrifugation to increase yield [30].

FAQ: How can I effectively remove genomic DNA contamination from my RNA prep? Contaminating DNA can lead to false-positive signals in RT-PCR and qPCR assays [30].

- Solution: The most effective and convenient method is to perform an on-column DNase digestion during the extraction protocol. This involves adding a DNase I solution directly onto the silica membrane and incubating to digest any bound DNA, which is then washed away [30]. This is generally more efficient and results in higher RNA recovery than performing digestion after the RNA has been purified.

Essential Protocols for High-Quality RNA Extraction

Protocol 1: RNA Extraction from Fresh/Frozen Tissue using TRIzol

This classic phenol-guanidinium method is robust and applicable to a wide variety of sample types [32].

- Sample Preparation: Rapidly freeze tissue in liquid nitrogen. Grind the tissue to a fine powder using a mortar and pestle, keeping the tissue frozen by adding liquid nitrogen [32].

- Homogenization: Transfer the powder to a tube containing TRIzol reagent (e.g., 1 mL per 50-100 mg of tissue) and homogenize completely until no visible tissue fragments remain [30].

- Phase Separation: Incubate the homogenate for 5 minutes at room temperature. Add chloroform (0.2 mL per 1 mL of TRIzol). Cap the tube securely and shake vigorously for 15 seconds. Incubate for 2-3 minutes [32].

- Centrifugation: Centrifuge at 12,000 × g for 15 minutes at 4°C. The mixture will separate into three phases: a lower red phenol-chloroform phase, an interphase, and a colorless upper aqueous phase containing the RNA [32].

- RNA Precipitation: Transfer only the aqueous phase to a new tube, aspirating carefully so that the interphase is not disturbed and carried over as contamination. Add an equal volume of 100% isopropanol (and 1 μL of glycogen as a coprecipitant) to the aqueous phase. Incubate at -20°C for at least 30 minutes to precipitate the RNA [32].

- RNA Pellet: Centrifuge at 12,000 × g for 30 minutes at 4°C. A gel-like RNA pellet should form at the bottom of the tube.

- Wash: Carefully decant the supernatant. Wash the pellet with 75% ethanol (prepared with RNase-free water) by vortexing and centrifuging at 7,500 × g for 5 minutes at 4°C.

- Redissolution: Air-dry the pellet briefly for 5-10 minutes (do not over-dry). Dissolve the pure RNA in RNase-free water or TE buffer pH 7.5 [29].
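The reagent volumes in Protocol 1 scale with the amount of tissue, so they are easy to compute ahead of time. The sketch below assumes the ratios stated in the protocol (1 mL TRIzol per 50-100 mg tissue, 0.2 mL chloroform per 1 mL TRIzol, isopropanol equal to the recovered aqueous volume). The function name `trizol_volumes` and its parameters are hypothetical, and the recovered aqueous volume must be measured at the bench rather than predicted.

```python
def trizol_volumes(tissue_mg, mg_per_ml=50.0, aqueous_ml=None):
    """Estimate reagent volumes (in mL) for a TRIzol extraction.

    mg_per_ml: tissue mass handled per 1 mL TRIzol (the protocol gives 50-100 mg).
    aqueous_ml: measured volume of the recovered aqueous phase, if known.
    """
    if tissue_mg <= 0:
        raise ValueError("tissue_mg must be positive")
    if not 50.0 <= mg_per_ml <= 100.0:
        raise ValueError("mg_per_ml should stay within the 50-100 mg range")
    trizol_ml = tissue_mg / mg_per_ml
    volumes = {
        "trizol_ml": round(trizol_ml, 3),
        # 0.2 mL chloroform per 1 mL TRIzol
        "chloroform_ml": round(0.2 * trizol_ml, 3),
    }
    if aqueous_ml is not None:
        # Equal volume of 100% isopropanol relative to the aqueous phase
        volumes["isopropanol_ml"] = round(aqueous_ml, 3)
    return volumes


if __name__ == "__main__":
    print(trizol_volumes(100))
    print(trizol_volumes(75, mg_per_ml=100, aqueous_ml=0.4))
```

Using the lower end of the tissue range (50 mg per mL) gives the most conservative TRIzol volume, which is why it is the default here.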

Protocol 2: Column-Based RNA Extraction (e.g., Silica Membrane Kits)

This method is simpler, faster, and avoids the use of toxic phenol, making it ideal for routine use [29] [30].

- Lysate Preparation: Lyse and homogenize your sample in a guanidinium-based lysis buffer containing a denaturant and β-mercaptoethanol [33] [30].

- Bind RNA: Apply the lysate (often mixed with ethanol) to the spin column and centrifuge. RNA binds to the silica membrane, while contaminants pass through.

- Optional DNase Digestion: For protocols involving an on-column DNase digestion, apply the DNase I incubation mix directly to the center of the silica membrane once the RNA has bound, and incubate for 15 minutes at room temperature [30].

- Wash: Perform two wash steps using ethanol-based wash buffers. Centrifuge after each wash to remove the flow-through [30].

- Elute: Transfer the column to a clean collection tube. Apply RNase-free water or elution buffer to the center of the membrane, let it stand for 1-2 minutes, and centrifuge to elute the purified RNA [30].

RNA Extraction and Assessment Workflow

The following diagram illustrates the key decision points and quality control checkpoints in a robust RNA extraction workflow.

The Scientist's Toolkit: Essential Reagents and Kits

The table below summarizes key reagents and materials used for successful RNA extraction.

| Item | Function & Rationale |
|---|---|
| RNase Decontamination Solution (e.g., RNaseZAP) | Inactivates RNases on work surfaces, pipettors, and equipment to prevent introduction of external RNases [29] [30]. |
| Chaotropic Lysis Buffer (e.g., with Guanidinium) | Powerful denaturant that inactivates RNases, disrupts cells, and dissociates nucleoproteins, releasing RNA while protecting it from degradation [30] [32]. |
| RNase-Free Tubes and Tips | Certified to be free of RNases, preventing the introduction of contaminants during liquid handling [29]. |
| TRIzol Reagent | Mono-phasic solution of phenol and guanidine isothiocyanate. Effective for simultaneous disruption of cells, denaturation of proteins, and isolation of RNA from DNA and proteins [30] [32]. |
| Silica Membrane Spin Columns | Selectively bind RNA under high-salt conditions, allowing contaminants to be washed away. Provides a rapid, phenol-free purification method [30]. |
| DNase I (RNase-Free) | Enzyme that digests contaminating genomic DNA. On-column treatment is efficient and avoids additional purification steps [30]. |
| RNase-Free Water | Used to elute and dissolve purified RNA. Free of RNases and other contaminants that could affect downstream applications or accurate quantification [29]. |

Optimizing RNA Storage for Long-Term Stability

Even after successful extraction, improper storage can lead to RNA degradation and introduce variance.

- Storage Buffer: For long-term storage, dissolve RNA in RNase-free water or a slightly alkaline buffer like TE (pH 7.5). Avoid acidic conditions, as RNA is susceptible to hydrolysis at low pH [29].

- Temperature: For long-term storage, keep RNA at -70°C to -80°C. For short-term use (up to a month), RNA is stable at 4°C or -20°C [29].

- Handling: Create single-use aliquots to avoid repeated freeze-thaw cycles, which can degrade RNA and lead to accidental RNase contamination [30].
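The storage guidance above can be turned into a small planning helper. This is a minimal sketch under stated assumptions: the function name `storage_plan` is hypothetical, "up to a month" is treated as 30 days, and the aliquot size is whatever volume one experiment consumes.

```python
def storage_plan(total_ul, ul_per_experiment, storage_days):
    """Suggest a storage temperature and a single-use aliquot count."""
    if total_ul <= 0 or ul_per_experiment <= 0:
        raise ValueError("volumes must be positive")
    # Short-term (assumed <= 30 days): 4°C or -20°C; long-term: -70°C to -80°C.
    temperature = "4°C or -20°C" if storage_days <= 30 else "-70°C to -80°C"
    # One aliquot per experiment avoids repeated freeze-thaw cycles.
    aliquots = int(total_ul // ul_per_experiment)
    leftover_ul = total_ul - aliquots * ul_per_experiment
    return {"temperature": temperature, "aliquots": aliquots, "leftover_ul": leftover_ul}


if __name__ == "__main__":
    print(storage_plan(total_ul=50, ul_per_experiment=10, storage_days=180))
```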

FAQ: Core Protocol Selection

What is the fundamental difference between one-step and two-step RT-PCR?

In one-step RT-PCR, the reverse transcription (RT) and the polymerase chain reaction (PCR) are combined in a single tube and buffer, using a reverse transcriptase along with a DNA polymerase. In contrast, two-step RT-PCR performs these two steps in separate tubes, with individually optimized buffers and reaction conditions [34] [35].

How do I choose between a one-step and a two-step protocol?

The choice depends on your experimental goals, sample characteristics, and throughput needs. The table below summarizes the key differences to guide your decision.

Table 1: Comprehensive Comparison of One-Step vs. Two-Step RT-PCR

| Feature | One-Step RT-PCR | Two-Step RT-PCR |
|---|---|---|
| Workflow & Setup | Combined reaction in a single tube [34] [36]. | Separate, optimized reactions for RT and PCR [34] [36]. |
| Priming Strategy | Only gene-specific primers [34] [37]. | Choice of oligo(dT), random hexamers, or gene-specific primers [34] [35] [36]. |
| Handling Time | Limited hands-on time; faster setup [36] [37]. | More setup and hands-on time [36] [37]. |
| Risk of Contamination | Lower risk due to single, closed-tube reaction [34] [36]. | Higher risk due to multiple open-tube steps and pipetting [34] [36]. |
| Sample Throughput | Ideal for high-throughput processing of many samples [34] [36]. | Less amenable to high-throughput applications [34] [36]. |
| cDNA Archive | No stable cDNA pool is generated; must use fresh RNA for new targets [34] [37]. | A stable cDNA pool is created and can be stored for future analysis of multiple targets [34] [36]. |
| Target Flexibility | Limited to detecting a few targets per RNA sample [34]. | Ideal for analyzing multiple genes from a single RNA sample [34] [37]. |
| Reaction Optimization | A compromise between RT and PCR conditions; harder to optimize [34] [37]. | Easier optimization of each step independently; more flexible [34] [37]. |
| Sensitivity | Can be less sensitive due to compromised reaction conditions [34]. | Often higher sensitivity and cDNA yield [34] [37]. |
| RNA Sample Quality | Committed to the initial RNA input; sensitive to inhibitors [36] [37]. | RNA input can be adjusted; cDNA can be repurified to remove inhibitors [36]. |
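The trade-offs in the comparison table can be expressed as a rough decision rule. The sketch below is illustrative only: the function name `suggest_rt_pcr_format` and both numeric cutoffs (`few_target_limit`, `high_throughput_samples`) are hypothetical values chosen for the example, not thresholds from the cited sources.

```python
def suggest_rt_pcr_format(n_targets, n_samples, need_cdna_archive=False,
                          few_target_limit=2, high_throughput_samples=96):
    """Return (format, reasons) based on the one-step vs two-step trade-offs."""
    reasons = []
    if need_cdna_archive:
        # Only two-step generates a storable cDNA pool.
        reasons.append("cDNA archive needed")
    if n_targets > few_target_limit:
        # One-step is limited to a few targets per RNA sample.
        reasons.append("many targets per RNA sample")
    if reasons:
        return "two-step", reasons
    if n_samples >= high_throughput_samples:
        return "one-step", ["high sample throughput with few targets"]
    return "either", ["no strong constraint; consider sensitivity and hands-on time"]


if __name__ == "__main__":
    print(suggest_rt_pcr_format(n_targets=10, n_samples=8))
    print(suggest_rt_pcr_format(n_targets=1, n_samples=384))
```

A rule like this only captures the table's headline criteria; sample quality and inhibitor load may still tip the choice toward two-step.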

The following workflow diagram illustrates the key procedural differences between the two methods.

FAQ: Protocol Optimization and Troubleshooting

Why is my cDNA yield low or absent?

Low cDNA yield can result from several factors related to RNA quality and reaction conditions.

- Degraded RNA: Check RNA integrity by gel electrophoresis or a bioanalyzer. Minimize freeze-thaw cycles and use RNase-free reagents and techniques to prevent degradation [5] [38].

- RNA Secondary Structures: Denature secondary structures by heating the RNA to 65°C for ~5 minutes before reverse transcription, then place it immediately on ice [5].

- Suboptimal Reverse Transcription Temperature: Use a thermostable reverse transcriptase and perform the reaction at a higher temperature (e.g., 50°C or higher) to minimize secondary structures and improve primer binding specificity [5].

- Incorrect Priming Strategy: Ensure your priming method matches your template. Use random primers for bacterial RNA or other transcripts that lack a poly(A) tail. For polyadenylated mRNA, a mix of random and oligo(dT) primers can improve coverage and efficiency [5] [38].

- Presence of Reverse Transcriptase Inhibitors: Repurify the RNA sample to remove contaminants like salts, alcohols, or phenols. Alternatively, dilute the input RNA to reduce inhibitor concentration, or use a reverse transcriptase known for its resistance to inhibitors [5].

Why am I detecting genomic DNA in my results?

Genomic DNA (gDNA) contamination can lead to false-positive results and inaccurate quantification.

- Solution 1: DNase Treatment: Treat your RNA samples with DNase I prior to the reverse transcription reaction. Ensure the DNase is thoroughly inactivated or removed afterward [35] [5].

- Solution 2: Primer Design for qPCR: For the qPCR step, design primers to span an exon-exon junction. This ensures that amplification will only occur from the processed cDNA, not from the gDNA which contains introns [35].

- Solution 3: Include a "No-RT" Control: Always run a control reaction that contains all components except the reverse transcriptase. Amplification in this control indicates the presence of contaminating DNA [35] [5].
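When the no-RT control does amplify, the Cq difference between it and the matching +RT reaction gives a rough idea of how much of the signal could come from DNA. The sketch below is a back-of-the-envelope estimate rather than a method from the cited sources: the function name `gdna_fraction` is hypothetical, and the default assumes 100% amplification efficiency (a doubling per cycle).

```python
def gdna_fraction(cq_plus_rt, cq_no_rt, efficiency=1.0):
    """Estimate the fraction of the +RT signal attributable to DNA.

    cq_no_rt is None when the no-RT control shows no amplification.
    efficiency=1.0 assumes perfect doubling each cycle (an assumption).
    """
    if cq_no_rt is None:
        return 0.0
    delta_cq = cq_no_rt - cq_plus_rt
    # Each cycle of separation reduces the relative contribution by (1 + E).
    return min(1.0, (1.0 + efficiency) ** (-delta_cq))


if __name__ == "__main__":
    # No-RT control amplifies 10 cycles later than the +RT reaction.
    print(f"{gdna_fraction(22.0, 32.0):.4%}")
```

A small fraction suggests the contamination has little effect on quantification, while a no-RT Cq close to the +RT Cq indicates the RNA should be DNase-treated again.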

Why is my cDNA truncated or poorly representing my target?

This issue often relates to RNA integrity or the enzyme's ability to synthesize long transcripts.

- Poor RNA Integrity: As with low yield, start by verifying RNA integrity [5].

- Low-Purity RNA Sample: Re-purify RNA to remove contaminants that can interrupt the reverse transcriptase [5].

- Suboptimal Reverse Transcriptase: Select a high-performance reverse transcriptase with high processivity and low RNase H activity for synthesizing long, full-length cDNA transcripts [5].

The Scientist's Toolkit: Essential Reagents and Materials

Table 2: Key Research Reagent Solutions for cDNA Synthesis

| Reagent / Material | Function | Key Considerations |
|---|---|---|
| High-Quality RNA Template | The starting material for cDNA synthesis. Integrity and purity are critical. | Assess quality via A260/A280 ratio and gel electrophoresis. Use nuclease-free water for resuspension [5] [39]. |
| Reverse Transcriptase | Enzyme that synthesizes cDNA from an RNA template. | Choose enzymes with high thermal stability, sensitivity, and resistance to inhibitors. Consider RNase H activity for specific applications [35] [5]. |
| Primers (Oligo(dT), Random, Gene-Specific) | Provides a starting point for the reverse transcriptase. | Oligo(dT): For mRNA with poly-A tails. Random Hexamers: For all RNA types, good for degraded samples. Gene-Specific: For maximum target yield in one-step protocols [35] [36]. |
| RNase Inhibitor | Protects the RNA template from degradation during the reaction. | Crucial for maintaining RNA integrity, especially in lengthy protocols or with low-quality RNA samples [5]. |
| dNTPs | Building blocks for cDNA synthesis. | Use a final concentration of 0.5 mM or less to avoid inhibition of the reaction [38]. |
| No-RT Control | A critical quality control to check for gDNA contamination. | Contains all reaction components except the reverse transcriptase [35] [5]. |
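The dNTP limit in the table is a final concentration, so the stock volume to pipette follows from the usual dilution relation C1·V1 = C2·V2. The sketch below illustrates that arithmetic; the function names, the 10 mM stock, and the 20 µL reaction volume are assumptions chosen for the example.

```python
def stock_volume_ul(stock_mM, final_mM, reaction_ul):
    """Volume of stock needed so the reaction reaches final_mM (C1*V1 = C2*V2)."""
    if stock_mM <= 0 or reaction_ul <= 0:
        raise ValueError("stock concentration and reaction volume must be positive")
    if final_mM > stock_mM:
        raise ValueError("final concentration cannot exceed the stock concentration")
    return final_mM * reaction_ul / stock_mM


def dntp_within_limit(final_mM, limit_mM=0.5):
    """True when the final dNTP concentration is at or below the 0.5 mM guideline."""
    return final_mM <= limit_mM


if __name__ == "__main__":
    # Hypothetical setup: 10 mM dNTP stock, 20 µL reaction, 0.5 mM final.
    print(stock_volume_ul(stock_mM=10.0, final_mM=0.5, reaction_ul=20.0))
```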

Primer and Probe Design Principles for Optimal Specificity and Efficiency

Core Design Principles

Successful RT-PCR experiments depend on primers and probes designed according to established molecular principles. Adhering to these parameters ensures optimal amplification efficiency, specificity, and accurate quantification.

Table 1: Essential Design Parameters for Primers and Probes

| Parameter | Primers | Probes | Key Considerations |
|---|---|---|---|
| Length | 18–30 bases [40]; 18–24 nucleotides is ideal [41] | 15–30 bases [41]; 20–30 bases for single-quenched [40] | Long primers (>30 bp) hybridize slower and reduce efficiency [41]. |
| Melting Temperature (Tm) | 58–60°C [42]; Optimal 60–64°C [40] | 5–10°C higher than primers [40] [42] | Primer Tms should be within 1–2°C of each other [40] [42]. |
| Annealing Temperature (Ta) | 3–5°C below primer Tm [12] | N/A | Ta too low causes nonspecific binding; too high reduces efficiency [40]. |
| GC Content | 35–65% [40]; Ideal 40–60% [41] | 35–60% [40] [41] | Avoid consecutive G residues (e.g., >4 Gs) [40] [12]. |
| GC Clamp | 1–3 G/C bases in last 5 at 3' end [41] | Avoid 'G' at 5' end [40] [41] | Prevents quenching of 5' fluorophore on probes [40]. |
| Amplicon Length | 70–150 bp for optimal efficiency [40] [42] | N/A | Longer amplicons (up to 500 bp) require extended cycling times [40]. |
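Several of the primer parameters in the table can be screened directly from the sequence. The following sketch checks length, GC content, G runs, and the 3' GC clamp using the ranges quoted above, and compares a primer pair's Tm values. The function names are hypothetical, the example sequence is arbitrary, and Tm values are assumed to come from an external design tool because Tm calculation is not attempted here.

```python
import re


def gc_percent(seq):
    """Percentage of G and C bases in the sequence."""
    seq = seq.upper()
    return 100.0 * sum(seq.count(base) for base in "GC") / len(seq)


def check_primer(seq, min_len=18, max_len=30, gc_range=(35.0, 65.0), max_g_run=4):
    """Return a list of failed checks; an empty list means all checks pass."""
    seq = seq.upper()
    issues = []
    if not min_len <= len(seq) <= max_len:
        issues.append("length")
    if not gc_range[0] <= gc_percent(seq) <= gc_range[1]:
        issues.append("gc_content")
    # Flag runs of more than max_g_run consecutive G residues.
    if re.search("G{%d,}" % (max_g_run + 1), seq):
        issues.append("g_run")
    # GC clamp: 1-3 G/C bases among the last five bases at the 3' end.
    clamp = sum(seq[-5:].count(base) for base in "GC")
    if not 1 <= clamp <= 3:
        issues.append("gc_clamp")
    return issues


def tm_pair_ok(tm_fwd, tm_rev, max_diff=2.0):
    """True when the two primer Tm values are within max_diff degrees C."""
    return abs(tm_fwd - tm_rev) <= max_diff


if __name__ == "__main__":
    print(check_primer("AGCTGACCTGAAGCTCATCG"))  # arbitrary example sequence
    print(tm_pair_ok(60.0, 61.5))
```

A screen like this catches obvious design problems early, but it does not replace the dimer, hairpin, and BLAST checks described in the next section.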

Specificity and Secondary Structures

- Complementarity: Screen designs for self-dimers, heterodimers, and hairpins. The ΔG value for any secondary structure should be weaker (more positive) than –9.0 kcal/mol [40].

- On-Target Binding: Always run a BLAST alignment to ensure primers are unique to the desired target sequence and to avoid off-target interactions [40] [42].

- Amplicon Location: For gene expression analysis, design assays to span an exon-exon junction to prevent amplification of contaminating genomic DNA [40] [42].
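The sign convention in the ΔG rule is easy to misread, since a "weaker" structure has a more positive (less negative) ΔG. The sketch below filters ΔG values reported by a secondary-structure tool against the –9.0 kcal/mol limit. The function name `flag_stable_structures` and the example values are hypothetical, and the ΔG values themselves are assumed to come from an external analysis tool.

```python
def flag_stable_structures(structures, threshold_kcal_mol=-9.0):
    """Return names of structures whose ΔG is at or below the threshold.

    structures maps a label (e.g., "hairpin") to its ΔG in kcal/mol.
    A ΔG at or below -9.0 means the structure is too stable to accept.
    """
    return [name for name, dg in structures.items() if dg <= threshold_kcal_mol]


if __name__ == "__main__":
    reported = {"hairpin": -1.2, "self_dimer": -10.4, "heterodimer": -6.3}
    print(flag_stable_structures(reported))
```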

Troubleshooting Guide: FAQs and Solutions